GATHER PROXY V13.4

- GET FREE PROXY LIST ONLINE

- FREE PROXY SERVERS LIST

- ONLINE PROXY CHECKER

- SOCKS LIST

- WEB PROXY LIST

Gather Proxy Premium V13.4 is a powerful, easy-to-use application for finding and using proxy servers. It gives complete information about each proxy, such as its country, time, and anonymity level. Best of all, it doesn't let your network operators track your status.

Moreover, it leaves no trace in Windows system files or on the local drive. The program is portable, so it can be copied to any device, including a USB stick.

Gather Proxy Premium Improved Version V13.4 has a simple, user-friendly interface and a clean screen. It shows proxy information within a few seconds. This proxy grabber is also a lightweight Windows utility that can quickly collect proxies and SOCKS servers. Because it is small, it leaves no trace. You can also pick the proxy server to use in Chrome, Opera, Internet Explorer, and Firefox.

Gather Proxy Premium V13.4 is a simple, portable program that helps users build proxy server and SOCKS lists, along with details about each proxy server's last update, area, and time. This small Windows utility lets you collect proxy server and SOCKS data. The program also provides free proxy lists, free proxy server lists, free SOCKS lists, web proxies, and unblocked sites. Furthermore, it offers secure proxy server lists, SOCKS lists, and web tools.

1. Basic Settings:

First, set "Number proxies display in listview" and "Limited proxies when importing and harvesting" by clicking the "Advance" button. This is very important: these settings determine how many proxies you will get when importing or harvesting. Remember to tick the "Save current settings when close program" box so that the program keeps these settings for the next session.

2. Gather Proxy:

Custom URL source expressions

By default, the program harvests proxies from GatherProxy's own website sources. You can also use your own URL sources by selecting the My URLs option. With this option, GatherProxy provides several expressions that let you grab proxies in very smart ways.

#date# - Replaced with the current date on your PC.

Example: if today is 2013-07-24 and I have this link:

http://google.com/search?q=proxy+server+list+#date#

then, when the program scrapes it, the link becomes:

http://google.com/search?q=proxy+server+list+2013-07-24

#date-1# - Replaced with yesterday's date (the day before the current date on your PC).
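To make the two date expressions concrete, here is a minimal sketch of how such tokens could be expanded. It assumes the YYYY-MM-DD format shown in the example above; the function name `expand_date_tokens` is hypothetical, and this is not GatherProxy's actual implementation.

```python
from datetime import date, timedelta
from typing import Optional

def expand_date_tokens(url: str, today: Optional[date] = None) -> str:
    """Replace #date# with today's date and #date-1# with yesterday's.

    Hypothetical sketch: dates use the YYYY-MM-DD format from the example;
    GatherProxy's real formatting rules may differ.
    """
    if today is None:
        today = date.today()  # "current date on your PC"
    yesterday = today - timedelta(days=1)
    # The two tokens do not overlap, so the order of replacement does not matter.
    url = url.replace("#date-1#", yesterday.isoformat())
    return url.replace("#date#", today.isoformat())
```

Passing a fixed `today` makes the result reproducible: with `date(2013, 7, 24)`, the Google link above expands to the 2013-07-24 form, and `timedelta` takes care of month and year boundaries for `#date-1#`.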

#loop(startval,endval,counter)# - Lets you loop over numbered pages. It is useful and saves time when you want to grab all pages of a topic.

Example: I have the link http://example.com/forum/threads/topic-name/1.html, which is page 1 of "topic name". This topic has 15 pages, and I want to grab all of them without copying each link manually.

http://example.com/forum/threads/topic-name/#loop(1,15,1)#.html

When the program scrapes, this URL expands to the following 15 URLs:

http://example.com/forum/threads/topic-name/1.html

...

http://example.com/forum/threads/topic-name/15.html

Parameters:

startval - start value

endval - end value (included in the output)

counter - amount added to the value on each loop iteration

Example: #loop(10,30,5)# returns: 10 - 15 - 20 - 25 - 30
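The loop expansion can be sketched as follows. This is a minimal illustration under stated assumptions, not GatherProxy's code: the name `expand_loop` and the regex are hypothetical, only the first `#loop(...)#` token is expanded, and `endval` is treated as inclusive because both examples above include it (1 to 15 gives 15 URLs; 10, 30, 5 ends at 30).

```python
import re
from typing import List

# Matches #loop(startval,endval,counter)# with non-negative integer arguments.
LOOP_RE = re.compile(r"#loop\((\d+),(\d+),(\d+)\)#")

def expand_loop(url: str) -> List[str]:
    """Expand the first #loop(startval,endval,counter)# token into one URL per value.

    Hypothetical sketch: URLs without a loop token are returned unchanged.
    """
    match = LOOP_RE.search(url)
    if match is None:
        return [url]
    start, end, step = (int(g) for g in match.groups())
    if step <= 0:
        raise ValueError("counter must be a positive integer")
    prefix, suffix = url[:match.start()], url[match.end():]
    # range() excludes its stop value, so end + 1 makes endval inclusive.
    return [prefix + str(value) + suffix for value in range(start, end + 1, step)]
```

Because the text after the token is kept as-is, a trailing expression such as `#deep(1)#` survives the expansion and can be handled in a later step.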

#deep(1)# - A great feature that lets you deep-scan a URL. (This expression must be at the end of the link.)

Example:

http://google.com/search?q=proxy+server+list+#date##deep(1)#

becomes

http://google.com/search?q=proxy+server+list+2013-07-24#deep(1)#

and the program then deep-scans this URL. What is a deep scan? The program visits the URL, grabs all links on that page, and harvests proxies from the linked pages. Please see my video demo for clarification.

Another example:

http://google.com/search?q=proxy+server+list+#date#&start=#loop(10,30,10)##deep(1)#

This URL lets you scrape 3 pages of Google search results.
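A one-level deep scan as described above (visit the URL, collect its links, harvest proxies from each linked page) might look roughly like this. It is a hypothetical sketch using only the Python standard library: it assumes a "proxy" is any `ip:port` string in a page's text, the names `LinkCollector`, `fetch`, and `deep_scan` are invented for illustration, and it neither validates octet ranges nor checks whether proxies work.

```python
import re
from html.parser import HTMLParser
from typing import Callable, List, Set
from urllib.parse import urljoin
from urllib.request import urlopen

# IPv4-looking address followed by a port, e.g. 203.0.113.5:8080 (no range checks).
PROXY_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}:\d{2,5}\b")

class LinkCollector(HTMLParser):
    """Collects absolute URLs from the href attributes of <a> tags."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the scanned page's URL.
                    self.links.append(urljoin(self.base_url, value))

def fetch(url: str) -> str:
    """Download a page as text (no retries, proxies, or custom headers)."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")

def deep_scan(url: str, get: Callable[[str], str] = fetch) -> Set[str]:
    """Harvest ip:port strings from every page linked from `url` (one level deep)."""
    collector = LinkCollector(url)
    collector.feed(get(url))
    proxies: Set[str] = set()
    for link in collector.links:
        try:
            proxies.update(PROXY_RE.findall(get(link)))
        except (OSError, ValueError):
            # Skip links that fail to download or use an unsupported scheme.
            continue
    return proxies
```

The `get` parameter is a design choice for testing: passing a stub that returns canned HTML lets you try the logic offline, while the default `fetch` downloads real pages with `urllib.request`.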

#dfil(keyword)# - Available when you use the #deep(1)# function. It lets you filter the scraped URLs.

Example: if you want to scrape only the threads in an example.com forum whose URLs contain the keyword "scrapebox":

http://example.com/forum/f30-proxy-lits#deep(1)##dfil(scrapebox)#
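The trailing `#deep(1)#` and `#dfil(keyword)#` expressions can be pulled off the end of a URL and applied to the collected links as sketched below. This is an illustration under assumptions, not GatherProxy's parser: the names `parse_modifiers` and `filter_links` are hypothetical, and the case-insensitive keyword match is an assumption (the text only says the URL must contain the keyword).

```python
import re
from typing import List, Optional, Tuple

# Trailing modifiers: #deep(N)# at the end of the link, optionally followed by #dfil(keyword)#.
DEEP_RE = re.compile(r"#deep\((\d+)\)#(?:#dfil\(([^)#]*)\)#)?$")

def parse_modifiers(url: str) -> Tuple[str, int, Optional[str]]:
    """Split a URL into (base_url, deep_level, keyword); level 0 means no deep scan."""
    match = DEEP_RE.search(url)
    if match is None:
        return url, 0, None
    return url[:match.start()], int(match.group(1)), match.group(2)

def filter_links(links: List[str], keyword: Optional[str]) -> List[str]:
    """Keep links whose URL contains the keyword (assumed case-insensitive)."""
    if not keyword:
        return list(links)
    needle = keyword.lower()
    return [link for link in links if needle in link.lower()]
```

For the forum example above, `parse_modifiers` returns the base URL `http://example.com/forum/f30-proxy-lits`, deep level 1, and keyword `scrapebox`; the keyword is then used to drop every scraped thread link that does not mention it.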

Hey, it only finds SOCKS 4/5 and no proxies. Has this been fixed for proxies?

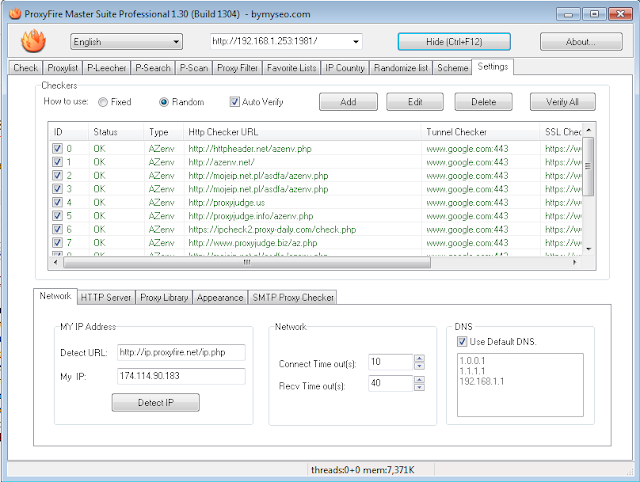

You should use the tool "ProxyFire Master Suite Pro v1.30 build 1304" for checking, to avoid overloading the hosts that serve the proxy judge, which leads to incorrect results.

Public proxy judges frequently report mixed results. That is to say, out of 100 requests to a judge, maybe only 40-50 actually go through properly, causing working proxies to be reported as dead. This instability is hard to detect, and it causes users to miss out on valuable proxies.

Therefore, you should use a tool that can randomize the proxy judge hosts: "ProxyFire Master Suite Pro v1.30 build 1304".

page download error
